There’s an extraordinary amount of hype around “AI” right now, perhaps even greater than in past cycles, where we’ve seen an AI bubble about once per decade. This time, the focus is on generative systems, particularly LLMs and other tools designed to generate plausible outputs that either make people feel like the response is correct, or where the response is sufficient to fill in for domains where correctness doesn’t matter.

But we can tell the traditional tech industry (the handful of giant tech companies, along with startups backed by the handful of most powerful venture capital firms) is in the midst of building another “Web3”-style froth bubble because they’ve again abandoned one of the core values of actual technology-based advancement: reason.

It doesn’t matter what you consider them to be; they are absolutely a form of AI, in both definition and practice.

tell me how… they are dumb as fuck and follow a stupid algo… the data make them somewhat smart, that’s it. They don’t learn anything by themselves… I could do that with a few queries and a database.

You could do that with a few queries and a database lol. How do you think LLMs work? It seems you don’t know very much about them.

This isn’t an argument in the way you think it is. Something being “dumb” doesn’t exclude it from possessing intelligence. By most metrics toddlers are “dumb”, but no one would ever seriously suggest that any person lacks intelligence in the literal sense. And having low intelligence is not the same as lacking it.

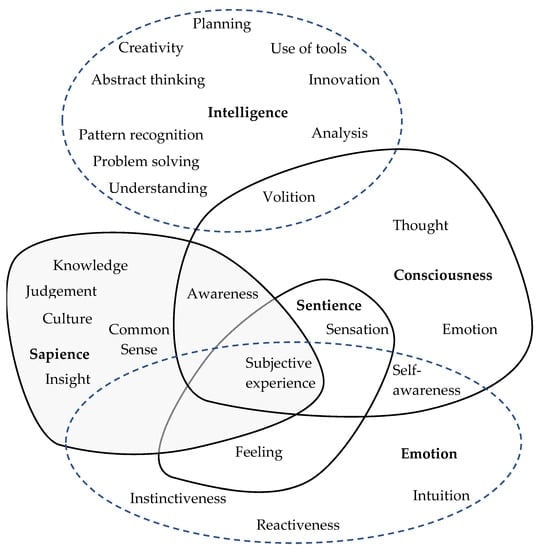

Can you even define intelligence? I would honestly hazard a guess that by “intelligence” you really mean sapience. The discussion of what counts as intelligence, sapience, or sentience is far more involved than you’d expect.

Our brains literally run on an algorithm.

And where’s the intelligence in people without the data we learn?

I don’t know what you even mean by this. Everything learns with external input.

The hell you could! This statement demonstrates you have absolutely no clue what you’re talking about. LLMs learn and process information in a method extremely close to how biological neurons function. We’re just using digital computation instead of analogue (the way all biology works).

LLMs have regularly demonstrated genuine creativity and even some emergent properties. They are able to learn certain “concepts” (I put concepts in quotes, because that’s not the right word here) that we as humans intrinsically know. Things like “a knight in armour” are likely to refer to a man, because historically it was entirely men that became knights, outside of a few recorded instances.

It can also learn general distances between cities/locations based on the text itself, like New York City and Houston being closer to each other than either is to Paris.

No, you 100% absolutely in no way ever could do the same thing with a database and a few queries.