I’m sorry to disappoint you, in that this is a consumer motherboard. So yes, AMD PSP exists, no iLO.

However, that’s where the bad part ends.

Behold what is, in my opinion, the most server-like MicroATX board released to consumers: the MSI PRO B550M-VC WIFI Micro ATX AM4 Motherboard (alternate link).

If you followed the link and read the specifications you would know exactly what I’m talking about, but for people who didn’t, here is the summary:

- 4 x16 ports (3 of them work at x1 speeds, the one closest to the CPU is PCI Express 5.0 x16).

- 8 SATA3 ports (people who wanted to build a NAS should be visibly salivating at this point).

Apart from that, there are 2 nice features that I would personally like to point out, as I look for these features in every board:

- 128GB of RAM supported (no ECC, and I suppose a lot of motherboards support it now, but nice to have).

- A 2230 E-key slot.

  - I know a few of you might be wondering why I’m mentioning the slot used for a WiFi card in this post - I invite you to take a look at this.

    - It’s a link to an Aliexpress item, so if you don’t want to click, here’s a short version: It’s an E-key to 2.5GbE converter, using a Realtek RTL8125B chip. It’s amazing, I learnt about these adapters from a random Level1Techs video. I think a lot of people could use this.

And there you have it. If you’re building a system that requires heavy PCIe access and a lot of SATA3 storage, I think this is the best value you can find when purchasing new.

Cheers

- The first PCIe x16 slot should be 4.0 only. PCIe 5.0 is not supported until AM5.

- Most AM4 CPUs support ECC memory. So you can use DDR4 ECC UDIMM with this board. The problem is that the ECC feature support is incomplete.

- Half of the 8 SATA3 ports are from the chipset while the remaining half are from an ASM1064 controller.

Thanks for pointing that out. Newegg and Microcentre say it’s PCIe 5.0, but maybe I got the board wrong. I’ll correct it (Lemmy.world has been horrible today; I can’t edit posts, can’t reply to anyone and am having a generally awful experience).

I didn’t know about the ECC memory, thanks.

I don’t see the problem in using different chips if you’re using an FS that deals directly with the drives, unless the chip is an unreliable one

4 x16 ports (3 of them work at x1 speeds

What does this even mean? Is it 64 PCIe 4.0 lanes in 4 slots, each x16? Or is it 1 x16 and 3 x1? If the former, that’s awesome; if the latter, boring (bar the 8 SATA ports). But what CPU even supports the former? Threadripper microATX?

It means it’s a waste of money.

It’s MSI, not surprising.

Simply put, the physical ports are x16 compatible, but they can only support one x16 connection along with 3 x1 connections
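For anyone who wants to put rough numbers on what an x1 link can carry, here is a minimal sketch. The per-generation transfer rates and line encodings are the standard PCIe figures; the function names are made up for illustration, and which generation the three x1 slots on this board actually run at is not stated in the thread, so PCIe 3.0 is used below purely as an assumption. Protocol overhead is ignored, so real throughput will be somewhat lower.

```python
# Approximate one-direction bandwidth of a PCIe link, ignoring packet overhead.
# generation -> (raw rate per lane in GT/s, line-encoding efficiency)
PCIE_GENERATIONS = {
    2: (5.0, 8 / 10),      # 8b/10b encoding
    3: (8.0, 128 / 130),   # 128b/130b encoding
    4: (16.0, 128 / 130),  # 128b/130b encoding
}


def link_gbps(generation: int, lanes: int) -> float:
    """Return the approximate usable bandwidth of a link in Gbit/s."""
    raw_gt_per_s, efficiency = PCIE_GENERATIONS[generation]
    return raw_gt_per_s * efficiency * lanes


def fits(device_gbps: float, generation: int, lanes: int) -> bool:
    """True if a device's line rate fits within the link's bandwidth."""
    return device_gbps <= link_gbps(generation, lanes)


if __name__ == "__main__":
    # ASSUMPTION: the x1 slots run at PCIe 3.0; check the board manual.
    for name, rate in [("2.5GbE NIC", 2.5), ("10GbE NIC", 10.0)]:
        verdict = "fits" if fits(rate, 3, 1) else "does not fit"
        print(f"{name}: {verdict} in a PCIe 3.0 x1 link "
              f"({link_gbps(3, 1):.2f} Gbit/s)")
    print(f"PCIe 4.0 x16: {link_gbps(4, 16):.2f} Gbit/s")
```

Under that assumption, a 2.5GbE adapter has plenty of headroom in a single lane, while a 10GbE card would be bottlenecked unless the lane runs at PCIe 4.0.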

No ECC is (and should be) a complete deal breaker for any self hosted data store.

There are just so many budget friendly options that do support it these days, I can’t imagine making that sacrifice.

If you’re talking about requiring ECC for ZFS, your point is arguable.

With that said, could you point me to the newer AM4/AM5, LGA1200/LGA1700 boards which support ECC?

ZFS doesn’t need ECC more than any other filesystem. Technically it needs it less. But what it does do is expose just how common memory errors are.

ECC exists for a reason at the enterprise level. A very important reason. You need to be able to trust that the data that the CPU put in memory is the same as the data written to disk.
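A toy sketch of that point, not of how ZFS is actually implemented: a filesystem checksum catches corruption that happens after the checksum was computed, but if a bit flips in RAM before the checksum is taken, the corrupted data gets a perfectly valid checksum. SHA-256 and the helper names here are stand-ins chosen for illustration.

```python
import hashlib


def flip_bit(data: bytes, bit_index: int) -> bytes:
    """Return a copy of data with a single bit inverted."""
    buf = bytearray(data)
    buf[bit_index // 8] ^= 1 << (bit_index % 8)
    return bytes(buf)


def checksum(data: bytes) -> str:
    """Stand-in for a filesystem block checksum."""
    return hashlib.sha256(data).hexdigest()


original = b"family photos and tax documents" * 4

# Case 1: the bit flips on disk, AFTER the checksum was stored.
stored_sum = checksum(original)
on_disk = flip_bit(original, 13)
detected_on_disk = checksum(on_disk) != stored_sum

# Case 2: the bit flips in (non-ECC) RAM, BEFORE the checksum is computed.
in_ram = flip_bit(original, 13)
stored_sum_ram = checksum(in_ram)   # checksum of already-corrupt data
written = in_ram                    # the write itself is faithful
detected_in_ram = checksum(written) != stored_sum_ram
silently_corrupt = written != original

if __name__ == "__main__":
    print("disk corruption detected:", detected_on_disk)
    print("RAM corruption detected: ", detected_in_ram)
    print("data silently corrupt:   ", silently_corrupt)
```

In the second case every later scrub would pass, because the checksum and the data agree with each other; they just don't agree with what the application meant to write.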

Would you have any suggestions for newer consumer boards which support ECC?

Don’t buy a consumer board for a server unless you aren’t using it to store important data. Like, say, a GPU cloud gaming or Plex server would be fine on consumer hardware if you don’t need out-of-band management.

Also, ECC RDIMM is much easier to come by than ECC UDIMM, but it only works with EPYC or Xeon chips. I say if you’re going for storage then definitely buy enterprise gear, and if you’re going for raw CPU/GPU compute you should be fine with consumer hardware.

If you just want AM4/AM5 Ryzen chips, ASRock Rack makes some good boards with IPMI.

Here’s their X570 board. You can browse their site; they also have AM5 boards, and they don’t have three gimped x16@x1 slots.

The problem with enterprise hardware is:

- If it’s old, it’s not efficient.

- If it’s new, it’s prohibitively expensive.

Consumer hardware solves both of these problems. Yes, we don’t have iLO, but if someone is really motivated, they can use PiKVM. I am yet to figure out if I can run PiKVM without the hats on a different SBC but I think it can be done.

For me personally, I’ll be using said board in a NAS. With this board, I would no longer need an LSI HBA hogging my x16 port, which means if I ever decide to train ML models, I can get a GPU for myself.

The ASRock Rack series is exciting, but from what I have seen, that line of motherboards is really expensive. I’ll keep a lookout though: if I find one of their motherboards under $150 which fits my needs, it will become my number 1 choice.

I do not see why I absolutely need ECC memory for a NAS. I’m not going to store PBs of media/documents, it’ll likely be under 30TB (that’s a conservative estimate). I thought ECC memory is a nice-to-have (this is no enterprise workload).

Cheers

If it’s old, it’s not efficient.

Efficiency is relative. I’m not suggesting you get a high-clock, many-core server chip (though you could technically limit the clock speed and TDP and it would use as much power as a typical desktop), as there are plenty of low-power options that are ‘old’ (read: ~4 years old is not that old). Maybe look into some Xeon-D embedded boards solely for your storage system. Many of those boards were made specifically for storage appliances. They can also be had pretty cheap on eBay or wherever.

If it’s new, it’s prohibitively expensive.

I’d say $120 is too expensive for this motherboard. It seems like it should be ~$60 with those specs, not to mention it being last gen. So even though you’re buying new, you have an upgrade ceiling, so why not buy gear that is a year or two older with more features and expansion?

Consumer hardware solves both of these problems. Yes, we don’t have iLO, but if someone is really motivated, they can use PiKVM. I am yet to figure out if I can run PiKVM without the hats on a different SBC but I think it can be done.

FYI, iLO is HP’s out-of-band (IPMI) implementation. PiKVM is definitely cool, but it’s just adding more cost and another point of failure in your setup.

For me personally, I’ll be using said board in a NAS. With this board, I would no longer need an LSI HBA hogging my x16 port, which means if I ever decide to train ML models, I can get a GPU for myself.

If you want to train models and do other GPU compute stuff like that, I would definitely shoot for a more current-gen box just for that. In my (good) opinion, you should not run heavy compute loads on a server that is also serving/backing up your data,

I do not see why I absolutely need ECC memory for a NAS. I’m not going to store PBs of media/documents, it’ll likely be under 30TB (that’s a conservative estimate). I thought ECC memory is a nice-to-have (this is no enterprise workload).

ESPECIALLY if you’re not going to use ECC memory. No reason to put your important data at risk of corruption like that. I highly recommend holding out for something simple with DDR5 and a discrete GPU of your choosing for any actual compute workloads like that.

Prices for newer hardware like this may fall before you’re even ready to build this system, so keep that in mind. You’ll also have a much easier time selling it over older gen hardware in the future if you change your mind about whatever.

A 2230 E-key slot.

From the original post. Why would you want to do this in a server? If you got a different board with slots that weren’t x1, you could just get a 2.5GbE card… or, you know, 10Gbit.

Thank you for the tip. I will look into Xeon-D integrated motherboards. I will not be running very heavy loads (other than a Suricata instance for an IDS/traffic analyser, which might be heavy; I would love suggestions which might be lighter on compute). The idea for training ML models was just a remote possibility.

My apologies, I kept saying iLO/iDRAC when I meant IPMI.

Why do you suggest having separate devices for storage/compute?

My idea was to run FreeBSD on a ZFS mirror of NVME drives as the base, and run VMs/Jails on a pool of SATA SSDs. These would exist alongside HDDs but would otherwise not affect their functioning. In this scenario, how does having 2 machines make my infrastructure more reliable, other than FreeBSD not running as intended?

Have you had instances of memory corruption because you didn’t use ECC? I was under the impression from r/selfhosted that this problem was blown out of proportion.

The reason I mentioned the E-key slot is because that way, I don’t have to use a PCIe slot for the adapter, which I might use for something else. I have no need for 10Gbe.

Thanks!

What CPU do you recommend pairing with the motherboard? Ideally something with low idle power consumption.

Unless you are adding a discrete GPU, your options are limited to APUs which only have two models in Zen 3: 5600G and 5700G.

You don’t need a GPU for SSH or web services. Yeah, you’d need one to set it up, I guess. Actually idk, it might depend on your BIOS, but if your mobo is any good it should have an option to ignore the GPU failure warning.

While it would work no doubt, I do not recommend it. It complicates management in case things go really astray, especially since nowadays most consumer motherboards do not expose RS232 or any serial port. It is also more flexible and convenient for homelab machines to have some sort of GPU capability.

Eh, this looks like a repost bot. probably from Reddit 🤷♂️

I’m not a bot. I don’t know why my profile says so.

@MigratingtoLemmy @poVoq exactly what a bot would say 🤔

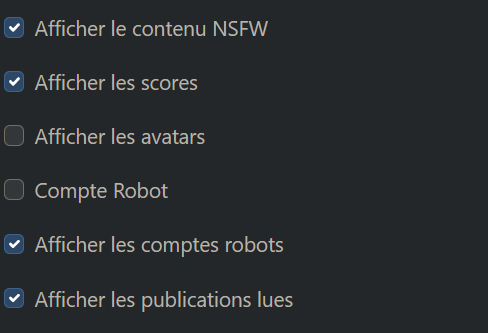

Can you check if my account still comes up as a bot account?

Still showing up with this ‘B’ status beside your username

I’ve toggled it off though

It looks fine from lemmy.world instance, but not my personal instance. I had this issue with my Display Name as well at other instances, may take a while for it to take effect

You can remove the bot symbol by unchecking the “Bot Account” box in Settings.

Lemmy.world has become very unresponsive for some reason. Can you check if I’m still tagged as a bot account?

It’s a B550M chipset so Ryzen 1st gen is a good cheap choice; however, a lot of them didn’t have integrated graphics, so you would need a video card to be able to boot. My current server has a GT 730; you just need any video card so that POST is happy. That said, if you’re planning on using this in a build, it’s nothing too interesting. Sure, it has a lot of ports, but that’s about it. IMO you would get more mileage out of a cheap server motherboard.

Either a 4600G or a 5600G because I would use this as a NAS + virtualisation host. Others might have better recommendations.

I have posted this comment 10 times and I still can’t see it being a reply to your comment, please let me know if you can see it

I can see it!